Lecture 5: Principal Components Analysis

Covariance: A Reminder

The sample covariance for between the and coordinates is given by:

Where is the sample mean

The sample covariance is usually represented as a real symmetric matrix

Motivation

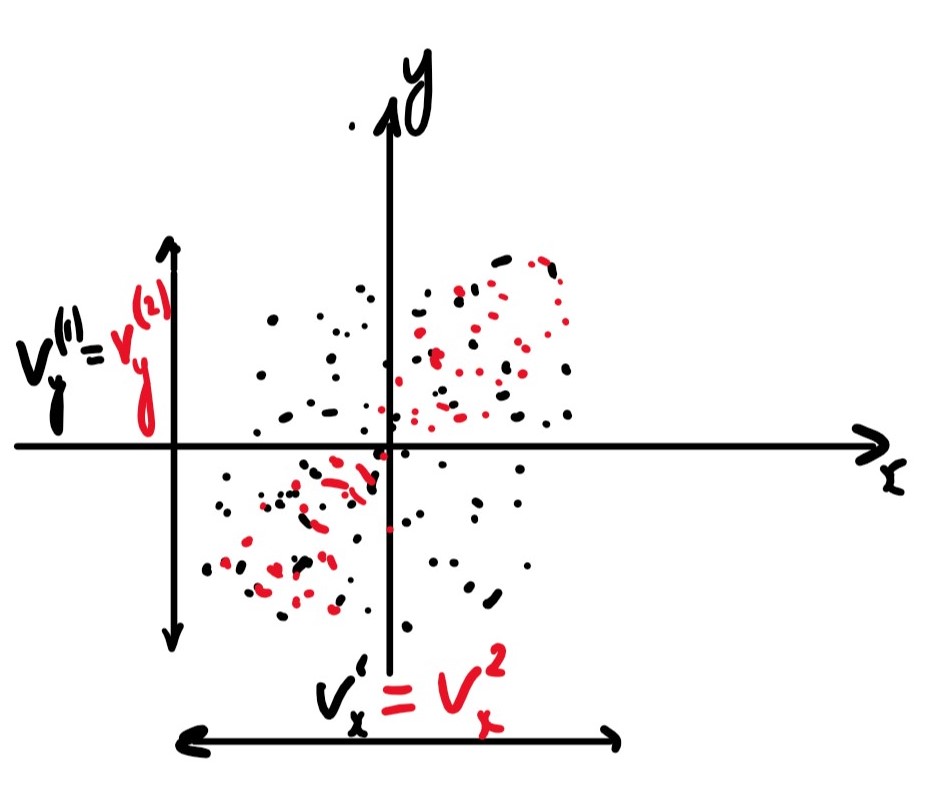

The two datasets shown in the figure above, in red and black, have an equal variance in both the and the direction, They also have the same mean but clearly have different correlation.

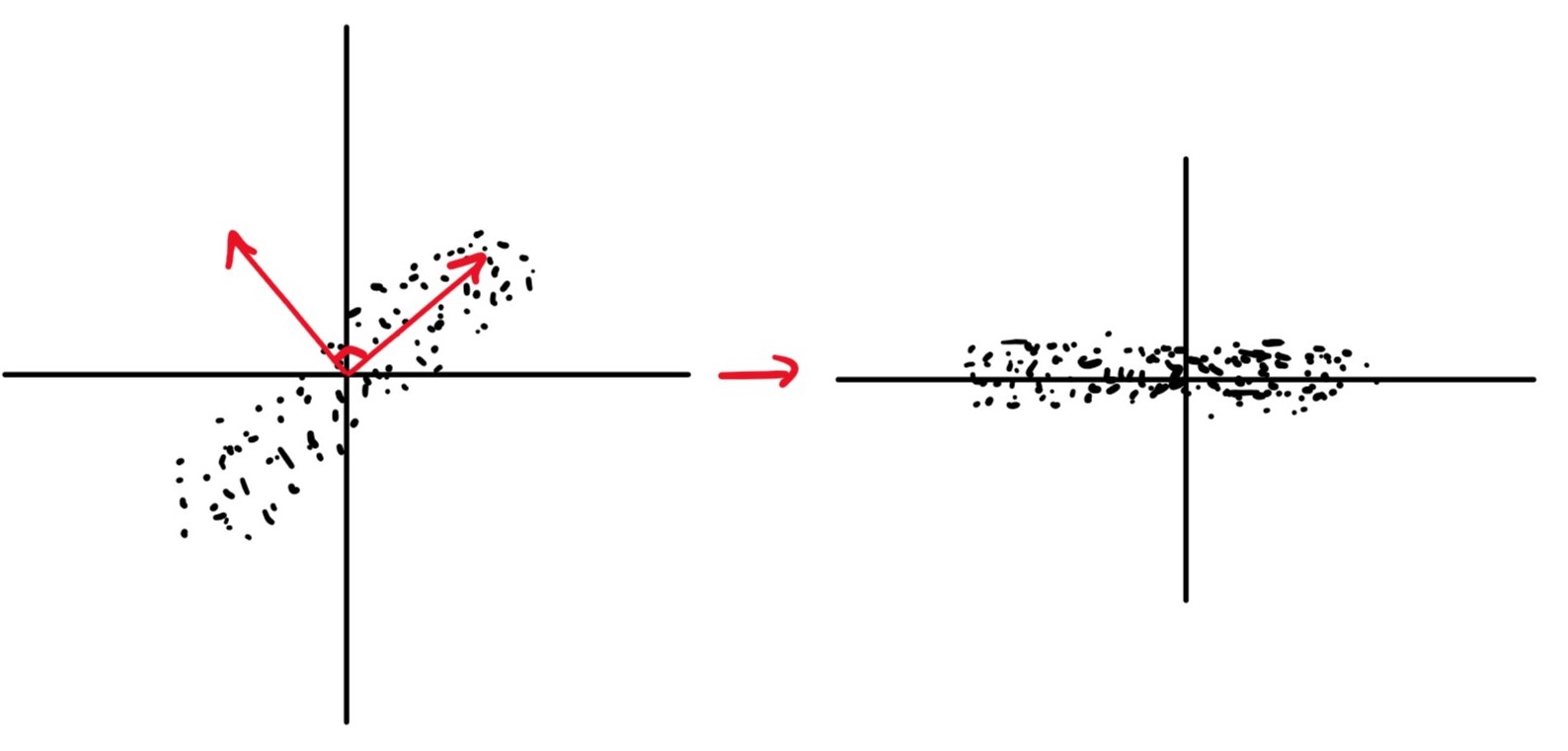

Conversely here we see data with the same shape but different covariance, with the LHS having positive covariance and the RHS having zero covariance. The data on the RHS is nicer in so far as the properties of the data are clearer. This is obvious when looking a the RHS data but not so for the LHS, however, if we were to interpret the LHS using a different basis (marked in red) we could represent it as the same as the RHS. With our data in the form of the RHS, we can see that our dataset is essentially a 1D set with noise in the dimension.

Overview

How do we find the axis that are best at representing the space? The aim of Principal Component Analysis is to answer this.

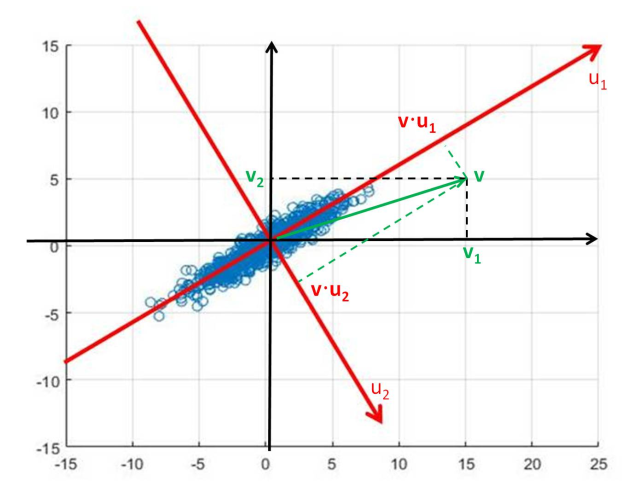

We begin by finding the axis that describes the direction of maximum variation in the data, shown as in the above figure.

To find this we start with a potential value for and:

- Project data onto

- Calculate the variance of the data in the direction of

- Maximise the variance with respect to

An issue with this is that must be a unit vector. This gives us, essentially, a constrained optimisation problem. We can solve this using Lagrange Multipliers which are outside the scope of this module.

However, we know that the solution to such a problem is that is the eigenvector of the covariance matrix corresponding to the largest eigenvalue.

The eigenvector of the next largest eigenvalue corresponds to the direction of the next biggest variation and so on.

The eigenvalue shows the variation in the direction of the corresponding eigenvector.

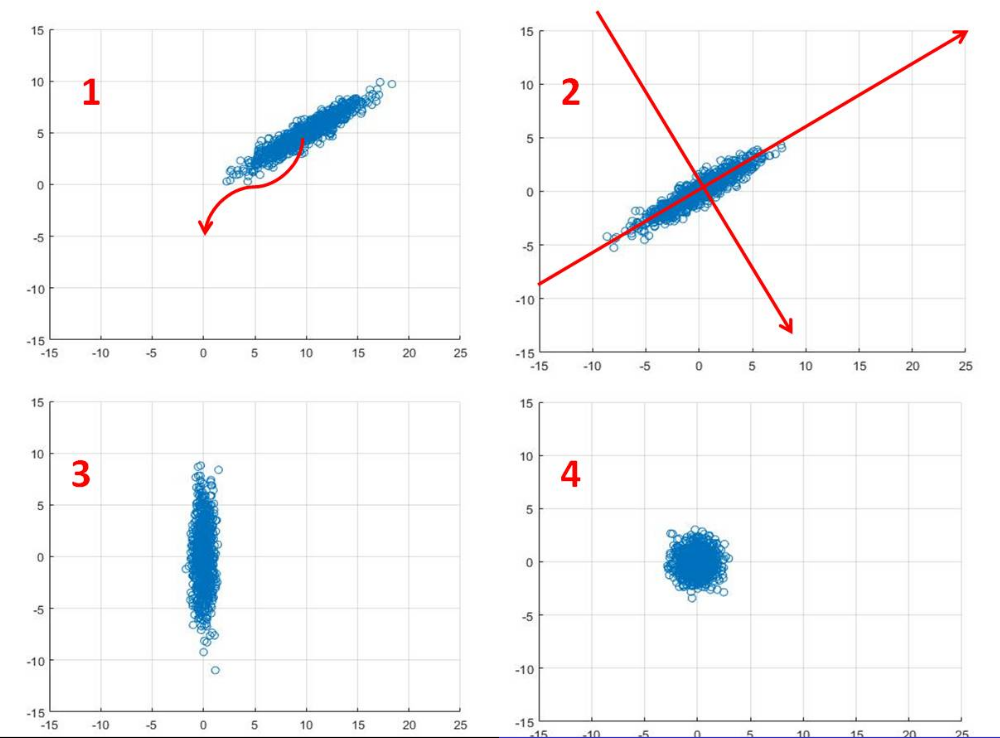

Principal Components Analysis is a tool to reveal the structure of a dataset

To carry out PCA, apply eigenvector decomposition to a covariance matrix

Where is a diagonal matrix and is an orthogonal matrix

The columns of are a new basis for . Basis vectors point in directions of maximum variance of

The eigenvalues is the variance of the data in the direction of

PCA Pictorially

- Centre data around the origin (error in digram), by calculating the mean and subtracting from each datapoint

- a. Calculate covariance matrix,

b. Perform eigenvector decomposition to , vectors in diagram show resulting eigenvectors - Plot data with respect to new coordinate system, resulting plot has 0 covariance.

- (Optional) Normalise out the variance to transform data into gaussian plot